lv status not available after reboot | lvm LV status not available (2024-10-07)


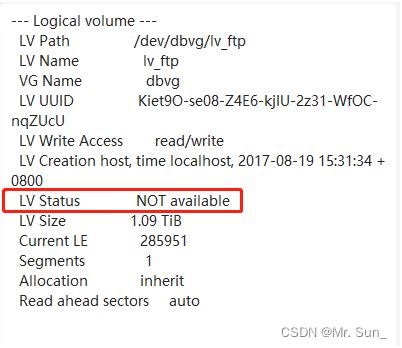

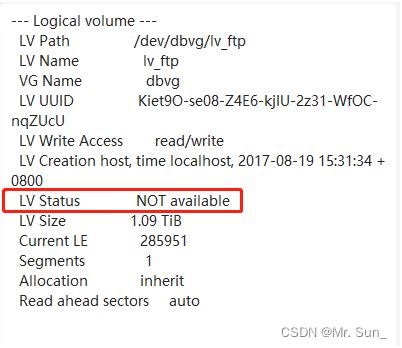


Activate the LV with the `lvchange -ay` command. Once activated, the LV will show as available: `# lvchange -ay /dev/testvg/mylv`

Root cause: when a logical volume is not active, `lvdisplay` shows it as NOT available.

Diagnostic steps: check the output of the `lvs` command to see whether the LV is active.
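The diagnostic and resolution steps above can be scripted. A minimal bash sketch, assuming LVM2's `lvs` is on the PATH and that the fifth character of the `lv_attr` field is the activation state (`a` for active), as described in lvs(8). The function names `list_inactive_lvs` and `activate_inactive_lvs` are hypothetical helpers, not LVM commands.

```bash
#!/usr/bin/env bash
# Sketch: find LVs that lvdisplay would report as "NOT available" and activate them.
# Hypothetical helper names; only lvs and lvchange are real LVM commands.

# Read "vg lv attr" lines on stdin, as printed by
#   lvs --noheadings -o vg_name,lv_name,lv_attr
# and print "vg/lv" for every LV whose state flag (5th char of lv_attr) is not "a".
list_inactive_lvs() {
    local vg lv attr
    while read -r vg lv attr; do
        if [ -z "$lv" ]; then
            continue                      # skip blank or malformed lines
        fi
        if [ "${attr:4:1}" != "a" ]; then
            printf '%s/%s\n' "$vg" "$lv"
        fi
    done
}

# Activate every inactive LV (needs root); same as running
# "lvchange -ay /dev/<vg>/<lv>" by hand for each one.
activate_inactive_lvs() {
    local name
    lvs --noheadings -o vg_name,lv_name,lv_attr | list_inactive_lvs |
    while read -r name; do
        echo "activating $name"
        lvchange -ay "/dev/$name" < /dev/null   # keep lvchange off the loop's stdin
    done
}
```

For example, `lvs --noheadings -o vg_name,lv_name,lv_attr | list_inactive_lvs` only reports, while `activate_inactive_lvs` run as root also activates. Keeping the parser separate from the action lets it be checked without touching real volumes.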

When you connect the target to the new system, the LVM subsystem needs to be notified that a new physical volume is available. You may need to call `pvscan`, `vgscan` or `lvscan` manually. Or you may need to call `vgimport vg00` to tell the LVM subsystem to start using vg00, followed by `vgchange -ay vg00` to activate it.
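The rescan-and-activate sequence from this answer, as a bash sketch. `vg00` is the example name from the answer; the `rescan_and_activate_vg` function, its optional `import` argument and the `DRY_RUN` switch are hypothetical additions. `vgimport` is made optional because it only applies to a VG that was exported with `vgexport` on the old system.

```bash
#!/usr/bin/env bash
# Sketch: make LVM notice a VG whose disks were just attached, then activate it.
# Hypothetical helpers; set DRY_RUN=1 to print the commands instead of running them.

run_or_echo() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: rescan_and_activate_vg VG [import]
rescan_and_activate_vg() {
    local vg="$1"
    run_or_echo pvscan                   # re-read physical volumes
    run_or_echo vgscan                   # re-read volume groups
    if [ "${2:-}" = "import" ]; then
        run_or_echo vgimport "$vg"       # only for a VG exported with vgexport
    fi
    run_or_echo vgchange -ay "$vg"       # activate all LVs in the VG
    run_or_echo lvscan                   # list LVs with ACTIVE/inactive status
}
```

`DRY_RUN=1 rescan_and_activate_vg vg00 import` prints the five commands in order; as root without `DRY_RUN` it executes them.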

A `vgchange` fixes it, but currently (until I have time for a deep dive) I can only boot through a rescue boot and this manual action. Ubuntu 20.04, LVM versions:

```
$ dpkg -l | grep -i lvm2
ii  liblvm2cmd2.03:amd64  2.03.07 …
```

I have the same issue. Dell hardware, 2x SSD in RAID1 with LVM for boot (works perfectly), 2x SSD in RAID1 with LVM for data. The data LV doesn't activate on boot most of the time; only rarely does it activate on boot. Entering the OS and running `vgchange -ay` activates the LV, and it works correctly.

The problem is that after a reboot, none of my logical volumes remain active. The `lvdisplay` command shows their status as "not available". I can manually issue an `lvchange -a y /dev/` and they're back, but I need them to come up automatically with the server.

You might run `vgscan` or `vgdisplay` to see the status of the volume groups. If a volume group is inactive, you'll have the issues you've described. You'll have to run `vgchange` with the appropriate parameters to reactivate the VG; consult your system documentation for the appropriate flags.

"LV not available" just means that the LV has not been activated yet, because the initramfs has decided it should not activate it. The problem seems to be in the `root=` parameter passed by GRUB on the kernel command line, as defined in `/boot/grub/grub.cfg`.

After a reboot the logical volumes come up with the status "NOT Available" and fail to be mounted as part of the boot process. After the boot process, I'm able to run `lvchange -ay .` to make the logical volumes "available" and then mount them.

I was using a setup of FCP disks -> multipath -> LVM, which was no longer mounted after an upgrade from 18.04 to 20.04. I was seeing these errors at boot; I thought it was OK to sort out duplicates …
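The thread points at the kernel command line but does not show the offending value. A minimal bash sketch for inspecting it, assuming a dracut-based initramfs (as on Red Hat), where `rd.lvm.lv=VG/LV` and `rd.lvm.vg=VG` restrict which LVs are activated early in boot (see dracut.cmdline(7)); Ubuntu's initramfs-tools does not use these options. The function name `show_lvm_boot_params` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print the root= and dracut LVM options the kernel was booted with.
# Hypothetical helper; pass a command line string, or none to read /proc/cmdline.

show_lvm_boot_params() {
    local cmdline="${1:-$(cat /proc/cmdline)}"
    local -a words
    local word
    read -ra words <<< "$cmdline"        # split on whitespace without globbing
    for word in "${words[@]}"; do
        case "$word" in
            root=*|rd.lvm=*|rd.lvm.lv=*|rd.lvm.vg=*) echo "$word" ;;
        esac
    done
}
```

Run `show_lvm_boot_params` with no argument on the affected host. If `rd.lvm.lv=` entries list only the root and swap LVs, that would be consistent with a data LV staying inactive until something activates it later in boot; the thread does not establish that this is the cause in the posts above.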

…`sys_exit_group`, `system_call_fastpath`. I added `rdshell` to my kernel parameters and rebooted again. After the same error, the boot sequence dropped into the rdshell. At the shell I ran `lvm lvdisplay`, and it found the volumes, but they were marked as `LV Status NOT available`: `dracut:/# lvm lvdisplay`
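With many volumes, `lvm lvdisplay` output in the dracut shell is long. A bash sketch that filters it down to the LVs reported as `NOT available`, assuming the newer `lvdisplay` layout with an `LV Path` line per volume (older LVM versions print the path under `LV Name` instead). The helper name `not_available_lvs` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read lvdisplay output on stdin, print the path of each LV whose
# "LV Status" is "NOT available". Hypothetical helper, not an LVM command.

not_available_lvs() {
    local f1 f2 rest path=""
    # read splits each line into the first two words and the remainder
    while read -r f1 f2 rest; do
        if [ "$f1" = "---" ]; then
            path=""                              # new "--- Logical volume ---" block
        elif [ "$f1 $f2" = "LV Path" ]; then
            path="$rest"
        elif [ "$f1 $f2" = "LV Status" ] && [ "$rest" = "NOT available" ] && [ -n "$path" ]; then
            echo "$path"
        fi
    done
}
```

Usage: `lvm lvdisplay | not_available_lvs`. Each printed path can then be activated with `lvm lvchange -ay <path>`, or a whole VG with `lvm vgchange -ay`, after which leaving the shell typically lets dracut continue booting.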
